Twitter and Oracle

![]() It really does surprise me how something as simple as Twitter could be used in ways I never thought of or imagined. One clever use of this technology is something Lewis Cunningham at the Database Geek blog has shared in a post I stumbled over last week entitled Ora_Tweet – Tweet From Oracle, A PL/SQL Twitter API . Simple and elegant, he has shared an idea that would let him make posts from the database to help keep him informed of long running processes. He uses a special twitter account to spare all who follow him from seeing when his processes are finished. This clever piece of code allows him to follow that account and the messages as they come across with a twitter client on his blackberry. Lewis provides the code in his post so I encourage you to visit his site and take a quick look at this clever use of Twitter.

It really does surprise me how something as simple as Twitter could be used in ways I never thought of or imagined. One clever use of this technology is something Lewis Cunningham at the Database Geek blog has shared in a post I stumbled over last week entitled Ora_Tweet – Tweet From Oracle, A PL/SQL Twitter API . Simple and elegant, he has shared an idea that would let him make posts from the database to help keep him informed of long running processes. He uses a special twitter account to spare all who follow him from seeing when his processes are finished. This clever piece of code allows him to follow that account and the messages as they come across with a twitter client on his blackberry. Lewis provides the code in his post so I encourage you to visit his site and take a quick look at this clever use of Twitter.

Of course most of us have relied on email or other transports to distribute these kinds of alerts for years. This is not rocket science, but does demonstrate how resourceful, clever people can use technology like this in ways I’m certain the original designers never anticipated. Or myself for that matter <g>. Well done Lewis thanks for sharing this with all of us.

SQL Server Worst Practices

It’s Friday the 13th and we get a surprisingly good, tongue-in-cheek post from Edgewood Solutions Engineers addressing SQLServer Worst Practices published at MsSQLTips. And not just the top ten (with apologies to Letterman) but thirteen well written and supported practices found in the wild that you most certainly do not want to ever be associated with. Many of the “worst practices” are SQL Server specific, but a few like 13 – No requirements (database requirements) include a useful template or outline for a baseline set of requirements which can be generalized across database platforms. Highly recommended, this post is must read for SQL Server managers (DBA) and operations support staff responsible for optimizing database performance.

Managing ORACLE Statistics for Optimal Performance

Karen Morton has published a very well written paper on Managing Statistics for Optimal Query Performance available at Cary Milsap’s MethodR site (http://www.method-r.com). The paper discusses ORACLE’s use of statistics in the query optimizer. Her suggestions for a adopting a statistics collection strategy including dynamic sampling is good complement to an earlier post I wrote titled ORACLE Tuning – When the SQL can’t be modified. This paper also includes a terrific discussion of a “bind peeking” (ORACLE 9i and higher – optimizer behavior as it “peeks” into bind variables in the hard parse phase before query plan decisions are made). This is a topic I don’t see much written about. Her contribution to understanding the complexity of managing this behavior in the ORACLE optimizer is much appreciated.

Very useful and well written, the paper is available when you visit the Method R site (http://www.method-r.com/downloads) on a regular basis and bookmark this important collection of papers and presentations.

SQL Server Data Loading Performance Guide

Thomas Kejser, Peter Carlin and Stuart Ozer over at Microsoft have published a Data Loading Performance Guide you can find at http://msdn.microsoft.com/en-us/library/dd425070.aspx. This paper is a concise collection of techniques and methods for bulk loading large data sets into SQL Server (2005, 2008). Especially helpful was their discussion related to concurrent loading and reading methods using Bulk Load, NOLOCK Queries, and Read Committed Snapshot Isolation. Now that is something you don’t see discussed everyday <g>. Well done, all of us who manage SQL Server platforms thank you for this invaluable contribution.

Data Migration – A Process Cycle Approach

Every business has process cycles (order-to-cash, source-to-pay) which we have used for years to optimize work activity and drive out waste and inefficiency. Understanding these cycles in depth provides us the opportunity to readily identify any tasks or activities which fall into one of the following three categories:

- Value-added

This step in the process adds form, function, and value to the product for the customer. This is usually a specific deliverable (like an approved master data specification). - Non-Value-Added

This step does not add form, function, or assist in the finished goods manufacturing or delivery of product and services (for example, this may be conducting a design review with intermediaries or those who will not perform the actual work). - Non-Value-Added-But-Necessary:

This step does not add value, but is a necessary step in the final value-added product or service (this is usually associated with “keeping the books”, recording metrics – think of your PPM tool for example.)

2) and (3) naturally create waste…(which we will be solving for in any process improvement effort).

I think I will contribute my notion of the same in a typical Data Migration effort and see what happens (apologize for not breaking into pretty tables, each discrete process cycle is identified in underline, work activities follow):

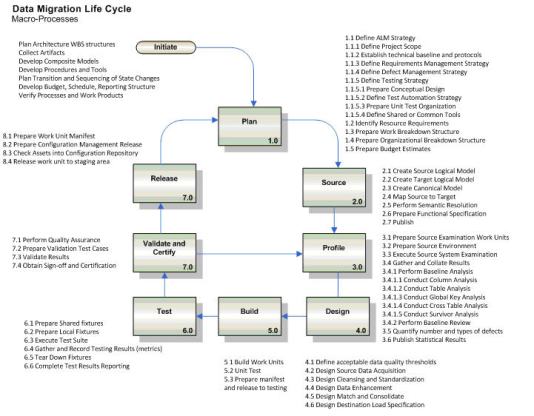

Plan to Act (PLAN)

Define ALM Strategy, Identify Resource Requirements, Prepare Work and Organizational Breakdown Structures,Prepare Budget Estimates

Design to Make (SOURCE)

Specification to Source

Create Source and Target Logical Model, Create Canonical Model,Map Source to Target, Perform Semantic Resolution, Prepare Functional Specification, Publish

Source to Solve (PROFILE)

Prepare Source Examination Work Units, Prepare Source Environment, Execute Source System Examination, Gather and Collate Results, Conduct Column, Table Global Key, and Cross Table Analysis, Survivor Analysis, Create Data Profiling Objects, Quantify number and types of defects, Publish Statistical Results

Solve to Assemble (DESIGN)

Define acceptable data quality thresholds, Design Source Data Acquisition, Cleansing and Standardization, Data Enhancement, Match and Consolidate, Destination Load Specification

Make to Deploy

Make to Test (BUILD)

Build Individual Work Units, Unit Test each Work Unit, Prepare manifest and release to testing

Test to Deploy (TEST)

Prepare Shared and Local Fixtures, Execute Test Suite, Gather and Record Testing Results (metrics), Tear Down Fixtures, Complete Test Results Reporting, Perform Quality Assurance (VALIDATE AND CERTIFY), Prepare Validation Test Cases, Validate Results, Obtain Sign-off and Certification

Deploy to fulfillment (RELEASE)

Prepare Work Unit Manifest, Prepare Configuration Management Release, Check Assets into Configuration Repository, Release work unit to staging area, Release to Production

Interested to hear what you think about this and welcome any thoughts or experience you may have about this common set of activities we run into every migration effort. And more importantly, what process improvements you have achieved in your efforts.

ORACLE Tuning – When the SQL can’t be modified

Many times we may encounter tuning or performance related problems where the underlying data access logic is buried or not available to us. The application may for example use an Object Broker or Query Server where SQL statements are simply unknown because they are generated at runtime and may change for each execution. The application’s query generator may be not able to correctly deal with specific constructs (for example, a restriction in the WHERE clause that cannot be resolved through the use of an index) that are used by many SQL statements. This is not the complete nighmare scenario there are still steps you can take to improve perfroamce. In this case, you may have to resort to techniques that solve the problem at the session or system level, not at the SQL statement level.

When you don’t have complete control of the SQL statements the application creates because the code is not available or is generated dynamically at runtime our tuning choices will probably be restricted to optimizing the data access structures (system) or modifying the execution environment (session). Recall the following can only be used to tune or optimize for specific SQL statements. They may not be applicable at the session or system level.

– Alter SQL Statements

– Use of hints

– SQL Profiles

– Stored Outlines

– SQL Plan Baselines

So what do we do?

The same tools Oracle provides that save hundreds of hours of labor intensive manual SQL tuning can also be used in this scenario to help at the system and session level where the code (SQL) is not available. Using these tools you can apply and measure global changes to the database first to potentially tune hundreds of queries at once. By failing to do global tuning first (for example using SQL with optimizer directives) you almost certainly find that subsequent global changes (e.g., optimizer parameter changes) may in effect “un-tune” carefully crafted individual SQL statements Start with system-level tuning and establish a baseline before beginning to tune poorly performing individual SQL statements generated on behalf of the application. This means you should carefully use the following methods executed in the following order.

– Tune Operating System, Disk I/O, and Network environment

– Gather and baseline ORACLE optimizer statistics

– Adjust cost based optimizer parameters and tune to meet site specific needs

– Optimize the Application database instance

– Perform Query Optimization and Tune the SQL Access workload

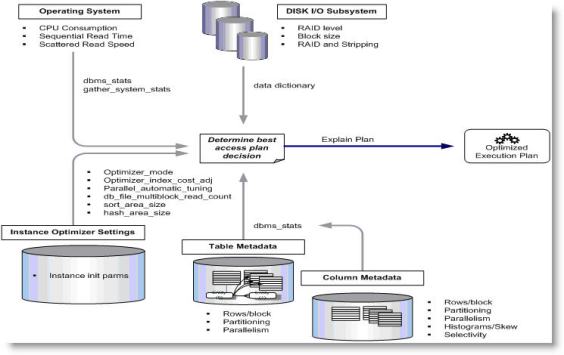

Your best friend in this case is the product’s Cost Based Optimizer model. The query optimizer is directly responsible for the performance of SQL statements The ORACLE optimizer cost model is illustrated in the following high-level diagram.

Note the sensitivity of the cost-based optimizer (CBO) cost model that accounts for I/O and CPU related data as well as table and index metadata. This is why it is important we tune the operating system, disk I/O, and network environment first and then gather and baseline ORACLE optimizer statistics. This cost model allows the optimizer to choose the best execution plans based on statistics of the underlying data. Decisions made by the CBO depend on the integrity of the statistics available at the time that the execution plan is parsed and executed. A common cause of poor performance is bad or outdated statistics in this scenario. Several useful tools for monitoring database performance and activity include the Active Workload Repository (AWR) and Automatic Database Diagnostic Monitor (ADDM). These tools can be used to pinpoint and isolate bad statistics that might be causing the optimizer to make bad decisions in preparing execution plans. Use the V$SYS_OPTIMIZER_ENV view to examine the current values for the optimizer related settings in the database instance. See the following URL for more information about this view:

http://download.oracle.com/docs/cd/B28359_01/server.111/b28320/dynviews_3080.htm

After understanding the common characteristics of the environment and application, then it is time to begin carefully optimizing the application database instance (there is a lot to this as well, will discuss this in a future post). Then and only then are you ready to perform what query optimization you can by tuning the SQL Access workload.

To summarize, the recommended approach to performance optimization for ORACLE when the application product doesn’t provide access to the underlying query engine is to use the following methods executed in the following order.

– Tune Operating System, Disk I/O, and Network environment

– Gather and baseline ORACLE optimizer statistics

– Adjust cost based optimizer parameters and tune to meet site specific needs

– Optimize the Application database instance

– Perform Query Optimization and Tune the SQL Access workload

Performing these steps in this order will maximize the value of the optimization effort and mitigate the risk of altering a global parameter change in the database that will impact the application when you don’t have access to the underlying codebase.

Method for an Integrated Knowledge Environment (Mike 2.0)

One of the more interesting sites I have kept an eye on is the open source Method for an Integrated Knowledge Environment better known as Mike 2.0 (http://mike2.openmethodology.org/wiki/MIKE2.0_Methodology). Initially created by a team from BearingPoint, the project started as the development of an approach for Enterprise Information Management in early 2005. Much of the content of the MIKE2.0 Methodology was made available to the Open Source community in late December 2006. The contributor base includes a number of individuals, from BearingPoint and from external community. The Data Governance and Management Consortium (DGMC) is being formulated to take ownership of MIKE2.0 and to lead the extension of many aspects of the methodology.

Sean McClowry is the overall lead for the MIKE2.0 Methodology. Sean wrote much of the Overall Implementation Guide, and was the core contributor on a number of MIKE2.0 Solution Offerings as well as the overall collaborative framework that hosts the MIKE2.0 Methodology. Andreas Rindler is the architect of the collaborative framework that hosts MIKE2.0 and primarily focuses on development of content related to Enterprise Content Management, Enterprise 2.0 and the Open Methodology Framework.

There is a wealth of material to explore on this site, you can spend days wading through the details and templates provided. As an example, I encourage you to see the Data Migration solutions offering found at http://mike2.openmethodology.org/wiki/Data_Migration_Solution_Offering and I think you will agree this is a terrific exposition on a subject area there is just not a lot written about. Of course there is much more to be done, but this represents an invaluable resource for all of us in this profession. Thank you Sean and Andreas for making this readily available to all of us, very much appreciate all the hard work you have put into this.

Data Profiling – Plan on it

It has always been a surprise that addressing data quality and profiling is not an activity I usually see planned in many data warehouse or migration projects. What is more bewildering is the assumption that multiple data sources will somehow magically transform themselves to meet “implicit canonical rules” and stakeholder expectations without investing the time and energy to ensure a common understanding about how to treat the inevitable anomalies and flat-out garbage data that has been spinning for years buried under a lot of application sleight-of-hand.

For example, note the typical flow of activities in a data migration project as represented in the following diagram.

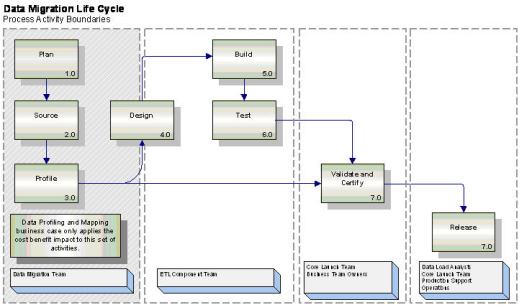

Planning for and adopting an automated data profiling and mapping set of work streams should be an integral part of the overall program, but this is unfortunately the exception rather than the rule. Unless the source data is pristine (yeah sure) across multiple source sinks want to guess what the result is? A lot of unnecessary rework, data quality issues, and dashed stakeholder expectations when the data values are revealed in the acceptance stage. Hoping the data can be sourced, mapped, and transformation rules applied in a paper exercise (or using Excel for more advanced organizations) represents a true failure to understand the opportunity. A more robust model would include planning assumptions to support and enable the following model assumptions about the fundamental processes needed to ensure success (or at least reducing guessing and defects we can expect). The major processes would include a model with the major elements identified in the following diagram.

Note how the profiling activity is used for both data mapping as well as the validation and certification processes with the customer. So the robust and more sensible model has evolved to meet an important and urgent need, reducing overall costs, and presenting real opportunities for compressing key process cycle time in the overall effort as illustrated in the following diagram.

So best practice should include the addition of a robust data profiling and mapping work streams to be used in a wide variety of front side activities as well as the validate and certification processes before release as the product approaches production. Will address the details of how this works and look forward to hearing from other hands-on practitioners who have experienced the power of this construct in their own practice.

The best of intentions…

Welcome to the data management professional blog. Will try to keep this as entertaining as possible and encourage all to contribute and share their personal and professional experiences in data management here. I will try to cover a number of topics ranging from master data management to analytics with a dash of common sense and business experience thrown in to preserve my reputation as a seemingly expensive, over-priced management consultant intact. This eclectic collection of posts will in many ways reflect the kind of chaos and wide variety of issues will all encounter in our daily professional lives dealing with data and by necessity will require a little patience from time to time. Just like our stakeholders, will try to contribute my understanding of the news, events, and invaluable contributions others have made to our profession. And, thank you for your interest, hope this will meet your expectations and you will become a regular reader and contributor.